Note

Click here to download the full example code

Harmonize HBN data using ComBat

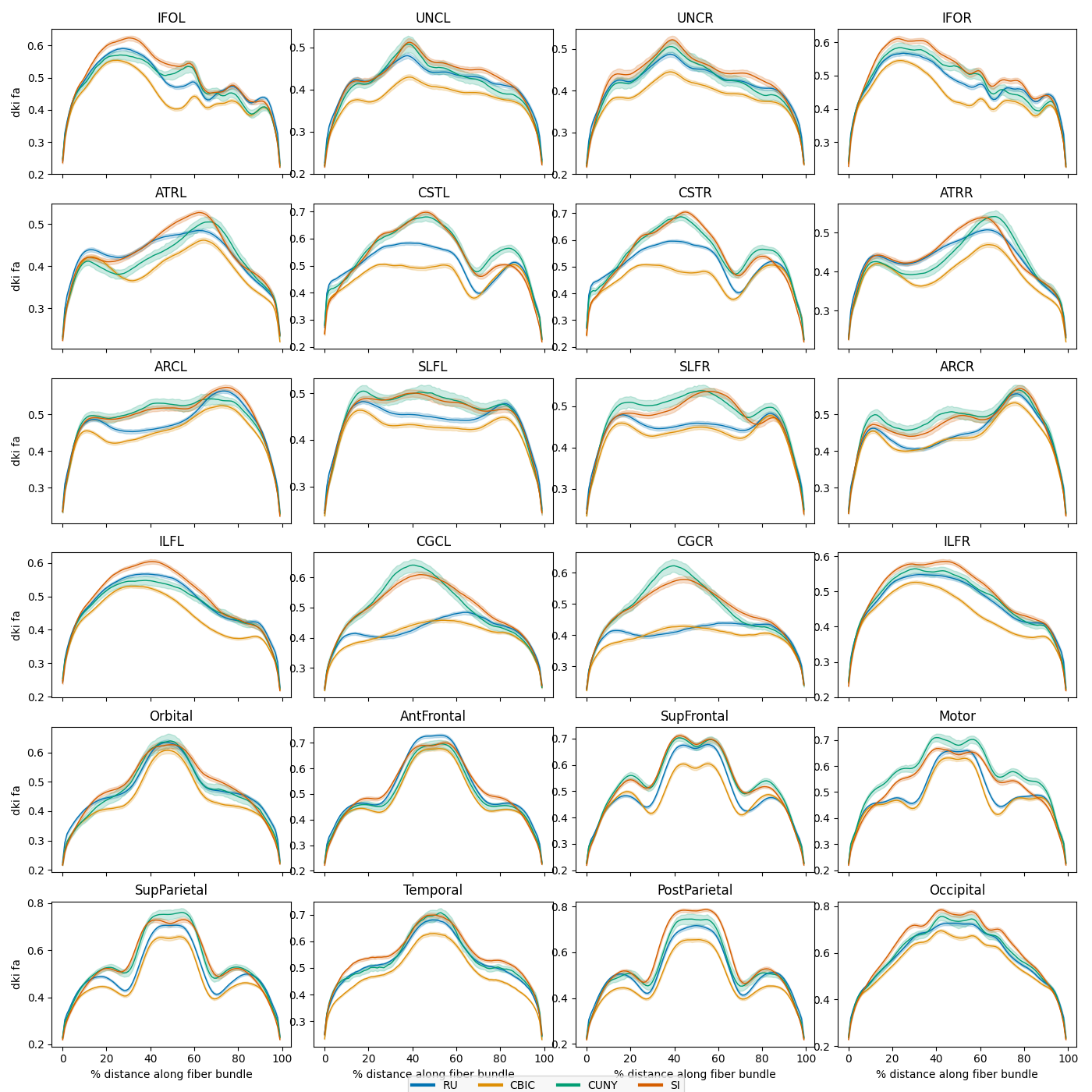

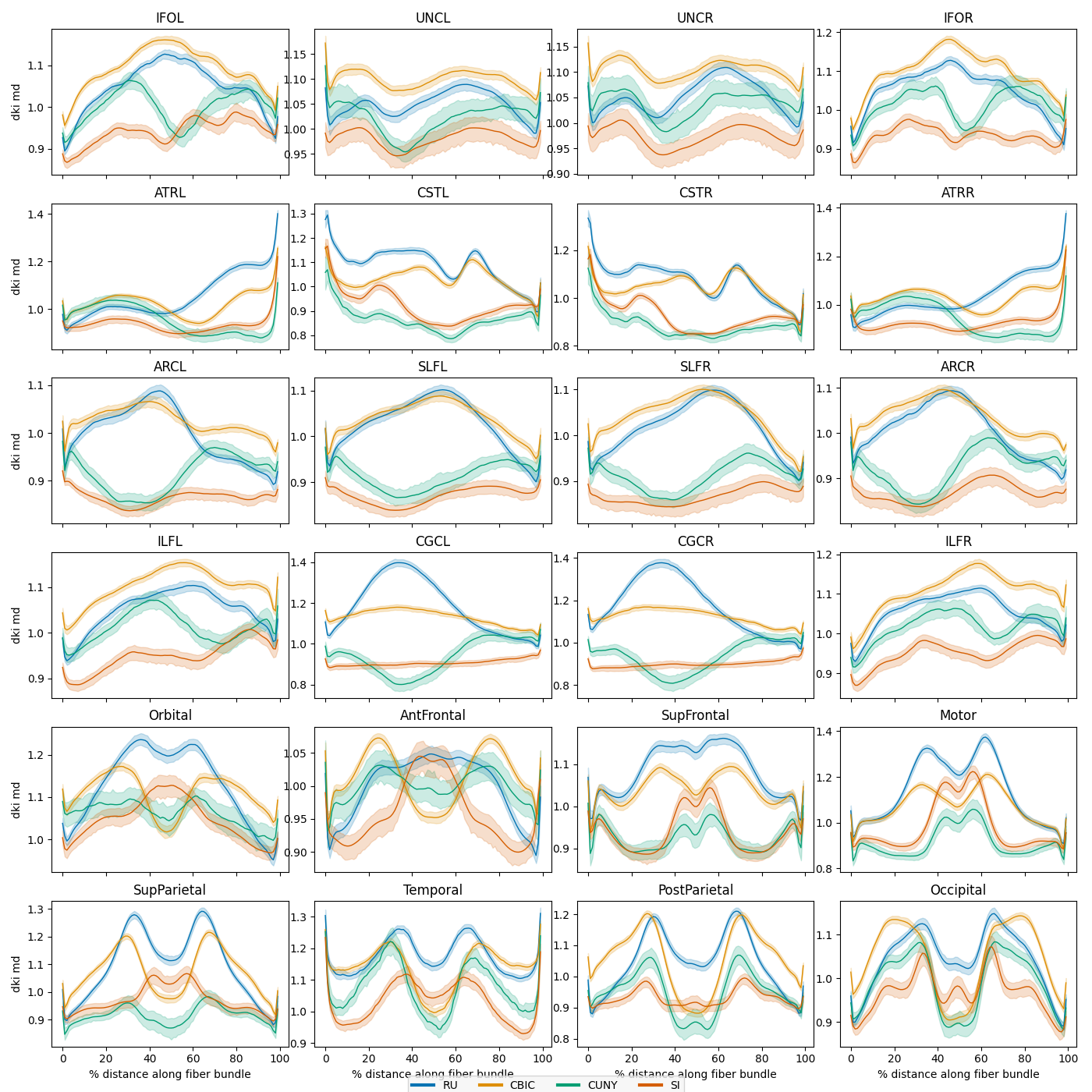

This example loads AFQ data from the Healthy Brain Network (HBN) preprocessed diffusion derivatives 1. The HBN is a landmark pediatric mental health study. Over the course of the study, it will collect diffusion MRI data from approximately 5,000 children and adolescents. We recently processed the available data from over 2,000 of these subjects, and provide the tract profiles from this dataset, which can be downloaded from AWS thanks to [INDI](http://fcon_1000.projects.nitrc.org/).

We first load the data by using the AFQDataset.from_files() static method

and supplying AWS S3 URIs instead of local file names. We then impute missing

values and plot the mean bundle profiles by scanning site, noting that there are

substantial site differences. Lastly, we harmonize the site differences using

NeuroComBat 2 and plot the harmonized bundle profiles to verify that the site

differences have been removed.

- 1

Adam Richie-Halford, Matthew Cieslak, Lei Ai, Sendy Caffarra, Sydney Covitz, Alexandre R. Franco, Iliana I. Karipidis, John Kruper, Michael Milham, Bárbara Avelar-Pereira, Ethan Roy, Valerie J. Sydnor, Jason Yeatman, The Fibr Community Science Consortium, Theodore D. Satterthwaite, and Ariel Rokem, “An open, analysis-ready, and quality controlled resource for pediatric brain white-matter research” bioRxiv 2022.02.24.481303; doi: https://doi.org/10.1101/2022.02.24.481303

- 2

Jean-Philippe Fortin, Drew Parker, Birkan Tunc, Takanori Watanabe, Mark A Elliott, Kosha Ruparel, David R Roalf, Theodore D Satterthwaite, Ruben C Gur, Raquel E Gur, Robert T Schultz, Ragini Verma, Russell T Shinohara. “Harmonization Of Multi-Site Diffusion Tensor Imaging Data” NeuroImage, 161, 149-170, 2017; doi: https://doi.org/10.1016/j.neuroimage.2017.08.047

import numpy as np

from afqinsight import AFQDataset

from afqinsight.plot import plot_tract_profiles

from neurocombat_sklearn import CombatModel

from sklearn.impute import SimpleImputer

from sklearn.model_selection import train_test_split

Fetch the HBN data

The AFQDataset.from_files() static method expects a path to

nodes.csv and subjects.csv files, but these file paths can be remote

URLs or AWS S3 URIs. We’ll use S3 URIs to grab the HBN data. After dropping

participants with null phenotypic values, it has 1,867 participants.

dataset = AFQDataset.from_files(

fn_nodes="s3://fcp-indi/data/Projects/HBN/BIDS_curated/derivatives/afq/combined_tract_profiles.csv",

fn_subjects="s3://fcp-indi/data/Projects/HBN/BIDS_curated/derivatives/qsiprep/participants.tsv",

dwi_metrics=["dki_fa", "dki_md"],

target_cols=["age", "sex", "scan_site_id"],

label_encode_cols=["sex", "scan_site_id"],

index_col="subject_id",

)

dataset.drop_target_na()

print(dataset)

AFQDataset(n_samples=1867, n_features=4800, n_targets=3, targets=['age', 'sex', 'scan_site_id'])

Train / test split

We can use the dataset in the train_test_split() function just as we

would with an array.

dataset_train, dataset_test = train_test_split(dataset, test_size=0.5)

Impute missing values

Next we impute missing values using median imputation. We fit the imputer using the training set and then use it to transform both the training and test sets.

imputer = dataset_train.model_fit(SimpleImputer(strategy="median"))

dataset_train = dataset_train.model_transform(imputer)

dataset_test = dataset_test.model_transform(imputer)

Plot average bundle profiles by scan site

Next we plot the mean bundle profiles in the test set by scanning site. The

plot_tract_profiles() function takes as input an AFQDataset and

returns matplotlib figures displaying the mean bundle profile for each bundle

and metric, optionally grouped by a categorical or continuous variable.

site_figs = plot_tract_profiles(

X=dataset_test,

group_by=dataset_test.classes["scan_site_id"][dataset_test.y[:, 2].astype(int)],

group_by_name="Site",

figsize=(14, 14),

)

0%| | 0/2 [00:00<?, ?it/s]

0%| | 0/24 [00:00<?, ?it/s]

4%|4 | 1/24 [00:05<01:56, 5.07s/it]

8%|8 | 2/24 [00:10<01:52, 5.13s/it]

12%|#2 | 3/24 [00:15<01:48, 5.17s/it]

17%|#6 | 4/24 [00:20<01:41, 5.10s/it]

21%|## | 5/24 [00:25<01:37, 5.11s/it]

25%|##5 | 6/24 [00:30<01:32, 5.14s/it]

29%|##9 | 7/24 [00:35<01:26, 5.11s/it]

33%|###3 | 8/24 [00:40<01:20, 5.01s/it]

38%|###7 | 9/24 [00:45<01:14, 4.96s/it]

42%|####1 | 10/24 [00:50<01:08, 4.91s/it]

46%|####5 | 11/24 [00:55<01:03, 4.89s/it]

50%|##### | 12/24 [00:59<00:58, 4.87s/it]

54%|#####4 | 13/24 [01:04<00:53, 4.85s/it]

58%|#####8 | 14/24 [01:09<00:48, 4.85s/it]

62%|######2 | 15/24 [01:14<00:43, 4.85s/it]

67%|######6 | 16/24 [01:19<00:38, 4.85s/it]

71%|####### | 17/24 [01:24<00:33, 4.84s/it]

75%|#######5 | 18/24 [01:28<00:29, 4.84s/it]

79%|#######9 | 19/24 [01:33<00:24, 4.84s/it]

83%|########3 | 20/24 [01:38<00:19, 4.82s/it]

88%|########7 | 21/24 [01:43<00:14, 4.82s/it]

92%|#########1| 22/24 [01:48<00:09, 4.82s/it]

96%|#########5| 23/24 [01:53<00:04, 4.82s/it]

100%|##########| 24/24 [01:58<00:00, 4.87s/it]

100%|##########| 24/24 [01:58<00:00, 4.92s/it]

50%|##### | 1/2 [01:58<01:58, 118.89s/it]

0%| | 0/24 [00:00<?, ?it/s]

4%|4 | 1/24 [00:04<01:51, 4.84s/it]

8%|8 | 2/24 [00:09<01:47, 4.91s/it]

12%|#2 | 3/24 [00:14<01:42, 4.90s/it]

17%|#6 | 4/24 [00:19<01:37, 4.88s/it]

21%|## | 5/24 [00:24<01:32, 4.87s/it]

25%|##5 | 6/24 [00:29<01:27, 4.84s/it]

29%|##9 | 7/24 [00:34<01:22, 4.84s/it]

33%|###3 | 8/24 [00:38<01:17, 4.84s/it]

38%|###7 | 9/24 [00:43<01:12, 4.85s/it]

42%|####1 | 10/24 [00:48<01:07, 4.85s/it]

46%|####5 | 11/24 [00:53<01:03, 4.86s/it]

50%|##### | 12/24 [00:58<00:58, 4.88s/it]

54%|#####4 | 13/24 [01:03<00:53, 4.87s/it]

58%|#####8 | 14/24 [01:08<00:49, 4.92s/it]

62%|######2 | 15/24 [01:13<00:44, 4.98s/it]

67%|######6 | 16/24 [01:18<00:39, 4.95s/it]

71%|####### | 17/24 [01:23<00:34, 4.91s/it]

75%|#######5 | 18/24 [01:27<00:29, 4.89s/it]

79%|#######9 | 19/24 [01:32<00:24, 4.88s/it]

83%|########3 | 20/24 [01:37<00:19, 4.89s/it]

88%|########7 | 21/24 [01:42<00:14, 4.88s/it]

92%|#########1| 22/24 [01:47<00:09, 4.87s/it]

96%|#########5| 23/24 [01:52<00:04, 4.87s/it]

100%|##########| 24/24 [01:57<00:00, 4.89s/it]

100%|##########| 24/24 [01:57<00:00, 4.88s/it]

100%|##########| 2/2 [03:57<00:00, 118.49s/it]

100%|##########| 2/2 [03:57<00:00, 118.55s/it]

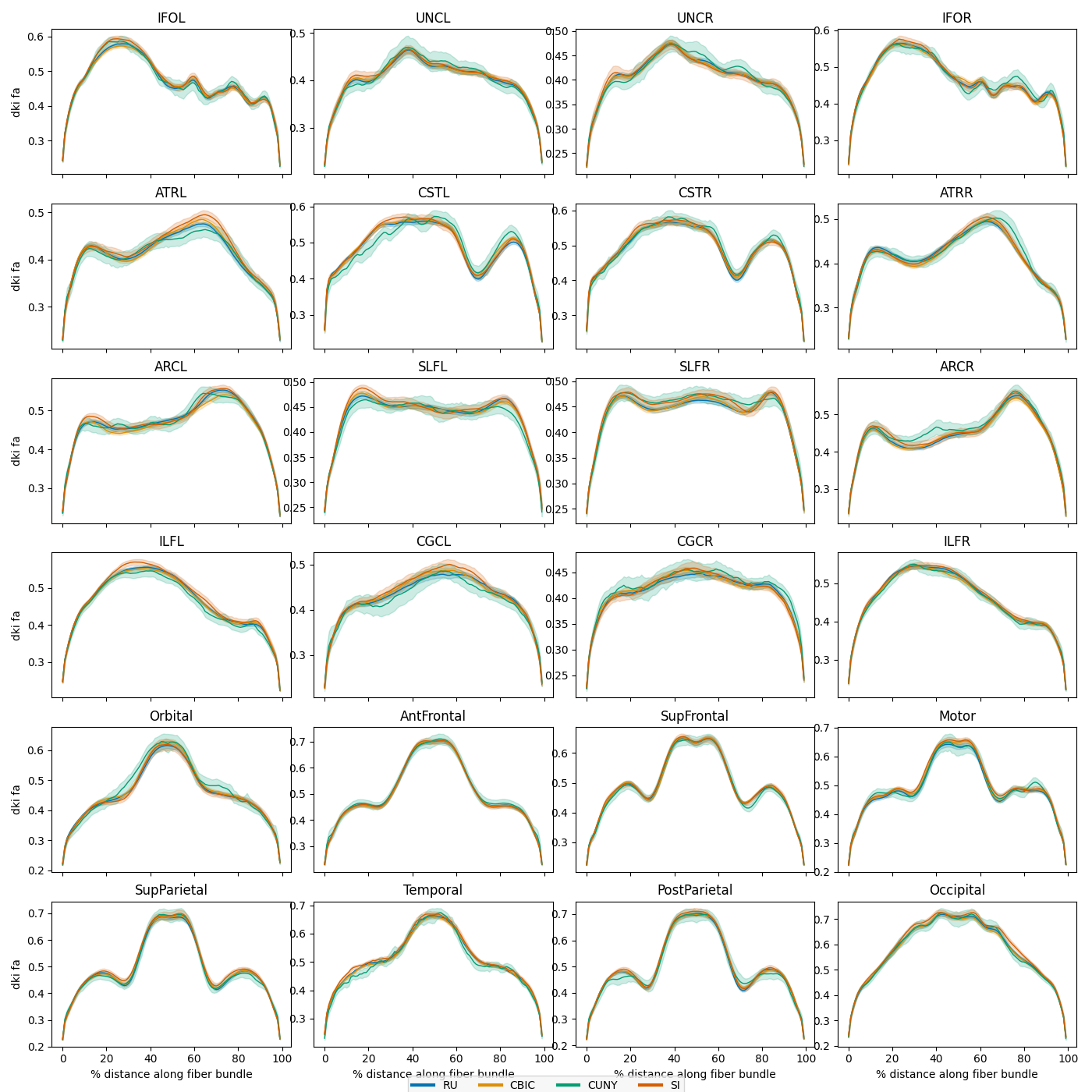

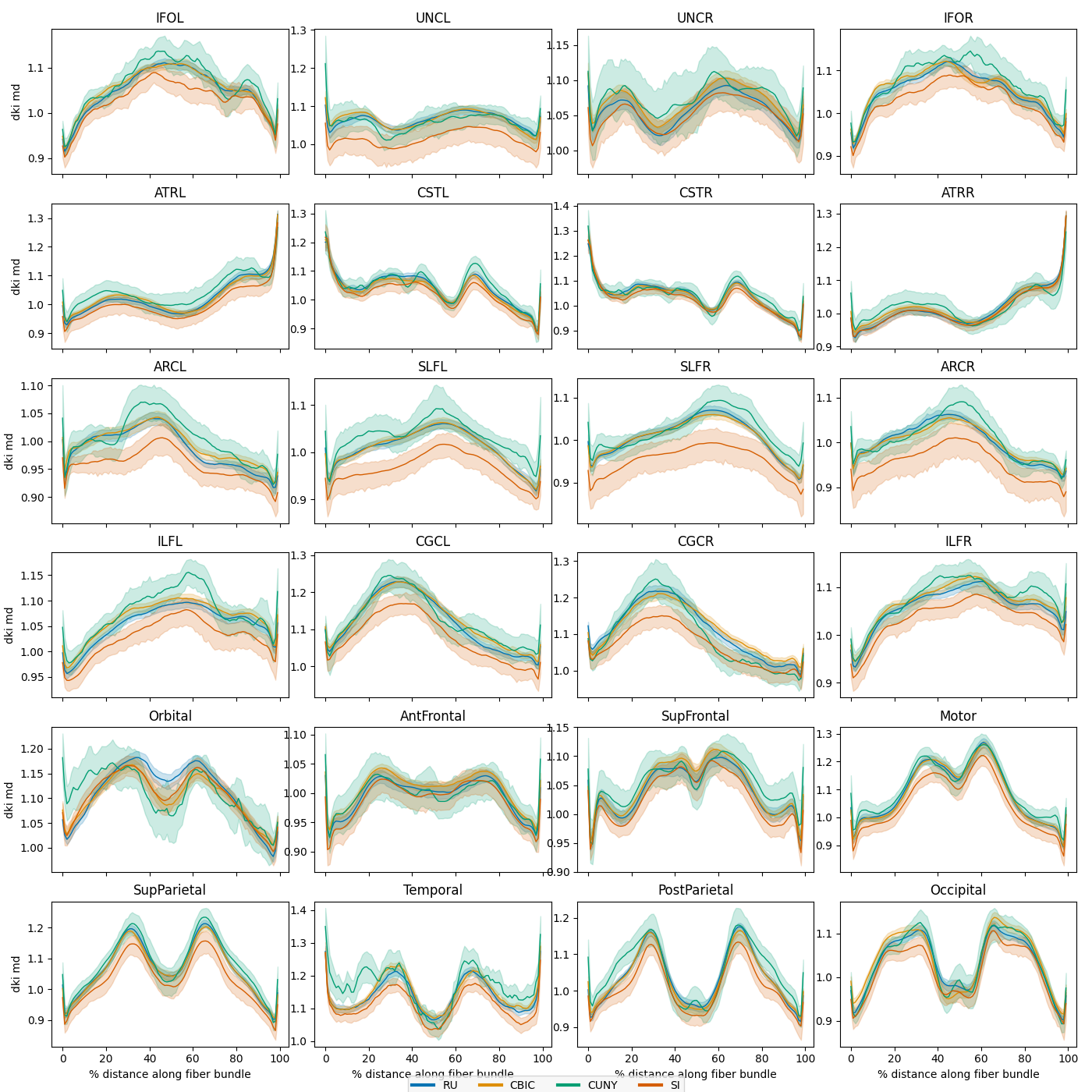

Harmonize the sites and replot

We can see that there are substantial scan site differences in both the FA and MD profiles. Let’s use neuroComBat to harmonize the site differences and then replot the mean bundle profiles.

N.B. We use the excellent neurocombat_sklearn package to apply ComBat to

our data. We love this library, however it is not fully compliant with the

scikit-learn transformer API, so we cannot use the

AFQDataset.model_fit_transform() method to apply this transformer to our

dataset. No problem! We can simply copy the unharmonized dataset into a new

variable and then overwrite the features of the new dataset with the ComBat

output.

Lastly, we replot the mean bundle profiles and confirm that ComBat did its job.

# Fit the ComBat transformer to the training set

combat = CombatModel()

combat.fit(

dataset_train.X,

dataset_train.y[:, 2][:, np.newaxis],

dataset_train.y[:, 1][:, np.newaxis],

dataset_train.y[:, 0][:, np.newaxis],

)

# And then transform a copy of the test set

harmonized_test = dataset_test.copy()

harmonized_test.X = combat.transform(

dataset_test.X,

dataset_test.y[:, 2][:, np.newaxis],

dataset_test.y[:, 1][:, np.newaxis],

dataset_test.y[:, 0][:, np.newaxis],

)

site_figs = plot_tract_profiles(

X=harmonized_test,

group_by=harmonized_test.classes["scan_site_id"][

harmonized_test.y[:, 2].astype(int)

],

group_by_name="Site",

figsize=(14, 14),

)

0%| | 0/2 [00:00<?, ?it/s]

0%| | 0/24 [00:00<?, ?it/s]

4%|4 | 1/24 [00:04<01:54, 4.99s/it]

8%|8 | 2/24 [00:09<01:49, 4.96s/it]

12%|#2 | 3/24 [00:14<01:43, 4.94s/it]

17%|#6 | 4/24 [00:19<01:38, 4.93s/it]

21%|## | 5/24 [00:24<01:34, 4.98s/it]

25%|##5 | 6/24 [00:29<01:30, 5.01s/it]

29%|##9 | 7/24 [00:34<01:25, 5.04s/it]

33%|###3 | 8/24 [00:40<01:20, 5.04s/it]

38%|###7 | 9/24 [00:45<01:15, 5.05s/it]

42%|####1 | 10/24 [00:50<01:10, 5.07s/it]

46%|####5 | 11/24 [00:55<01:05, 5.07s/it]

50%|##### | 12/24 [01:00<01:00, 5.05s/it]

54%|#####4 | 13/24 [01:05<00:55, 5.04s/it]

58%|#####8 | 14/24 [01:10<00:50, 5.07s/it]

62%|######2 | 15/24 [01:15<00:45, 5.10s/it]

67%|######6 | 16/24 [01:20<00:40, 5.12s/it]

71%|####### | 17/24 [01:25<00:35, 5.13s/it]

75%|#######5 | 18/24 [01:31<00:30, 5.14s/it]

79%|#######9 | 19/24 [01:36<00:25, 5.16s/it]

83%|########3 | 20/24 [01:41<00:20, 5.15s/it]

88%|########7 | 21/24 [01:46<00:15, 5.10s/it]

92%|#########1| 22/24 [01:51<00:10, 5.12s/it]

96%|#########5| 23/24 [01:56<00:05, 5.09s/it]

100%|##########| 24/24 [02:01<00:00, 5.03s/it]

100%|##########| 24/24 [02:01<00:00, 5.06s/it]

50%|##### | 1/2 [02:02<02:02, 122.36s/it]

0%| | 0/24 [00:00<?, ?it/s]

4%|4 | 1/24 [00:04<01:52, 4.88s/it]

8%|8 | 2/24 [00:09<01:47, 4.88s/it]

12%|#2 | 3/24 [00:14<01:41, 4.85s/it]

17%|#6 | 4/24 [00:19<01:37, 4.87s/it]

21%|## | 5/24 [00:24<01:32, 4.89s/it]

25%|##5 | 6/24 [00:29<01:27, 4.89s/it]

29%|##9 | 7/24 [00:34<01:24, 4.96s/it]

33%|###3 | 8/24 [00:39<01:20, 5.01s/it]

38%|###7 | 9/24 [00:44<01:14, 4.99s/it]

42%|####1 | 10/24 [00:49<01:10, 5.04s/it]

46%|####5 | 11/24 [00:54<01:05, 5.06s/it]

50%|##### | 12/24 [00:59<01:00, 5.07s/it]

54%|#####4 | 13/24 [01:04<00:54, 4.98s/it]

58%|#####8 | 14/24 [01:09<00:49, 4.94s/it]

62%|######2 | 15/24 [01:14<00:44, 4.97s/it]

67%|######6 | 16/24 [01:19<00:39, 5.00s/it]

71%|####### | 17/24 [01:24<00:34, 4.97s/it]

75%|#######5 | 18/24 [01:29<00:29, 4.93s/it]

79%|#######9 | 19/24 [01:34<00:24, 4.92s/it]

83%|########3 | 20/24 [01:39<00:19, 4.90s/it]

88%|########7 | 21/24 [01:43<00:14, 4.90s/it]

92%|#########1| 22/24 [01:48<00:09, 4.90s/it]

96%|#########5| 23/24 [01:53<00:04, 4.91s/it]

100%|##########| 24/24 [01:58<00:00, 4.90s/it]

100%|##########| 24/24 [01:58<00:00, 4.94s/it]

100%|##########| 2/2 [04:01<00:00, 120.67s/it]

100%|##########| 2/2 [04:01<00:00, 120.93s/it]

Total running time of the script: ( 8 minutes 45.792 seconds)